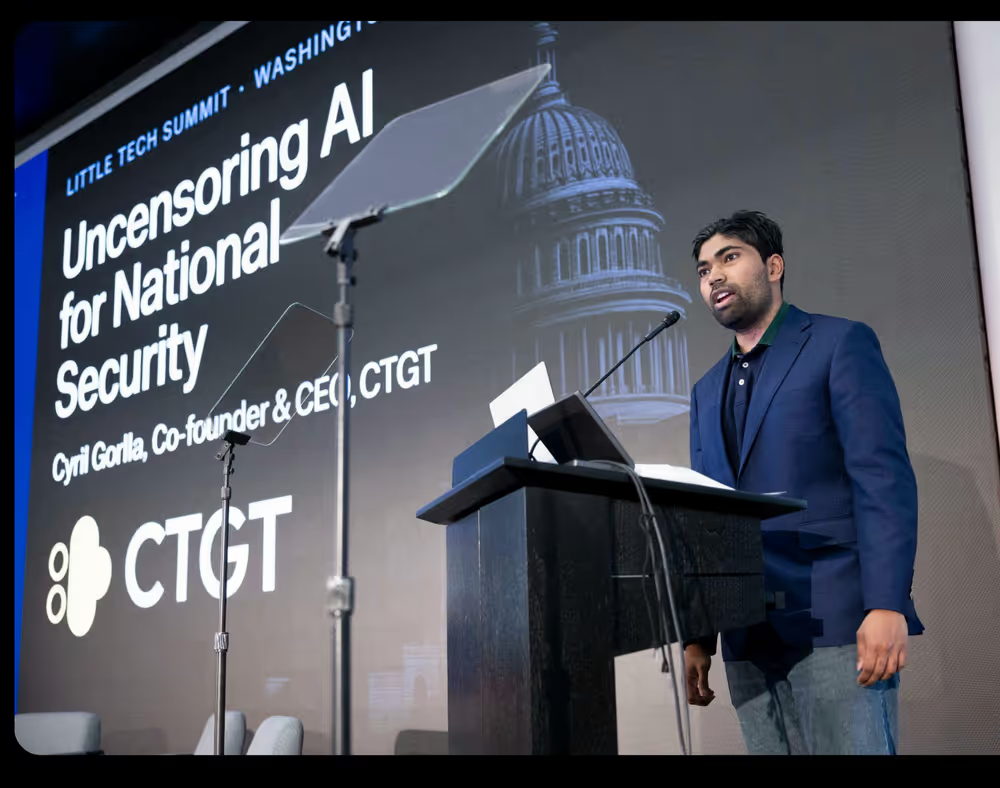

Scaling GenAI with Confidence

Moving Beyond "Guardrails" to Deterministic Remediation

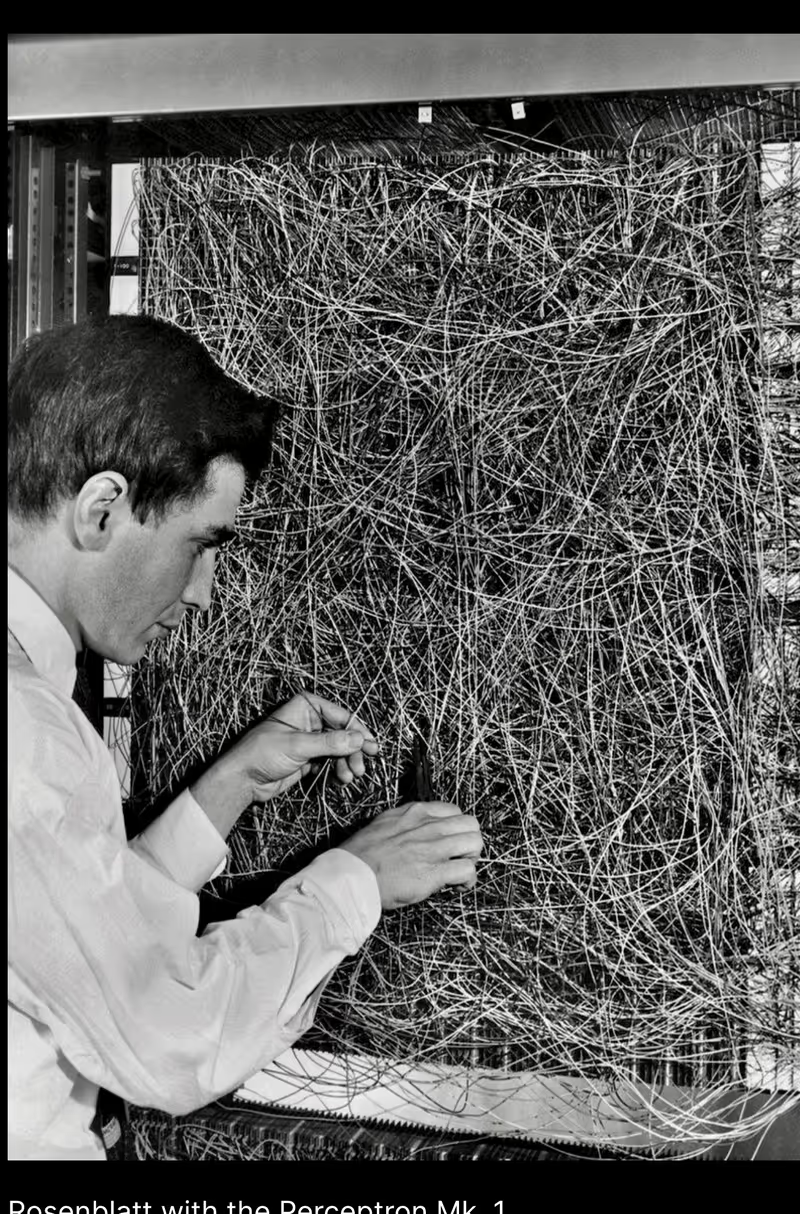

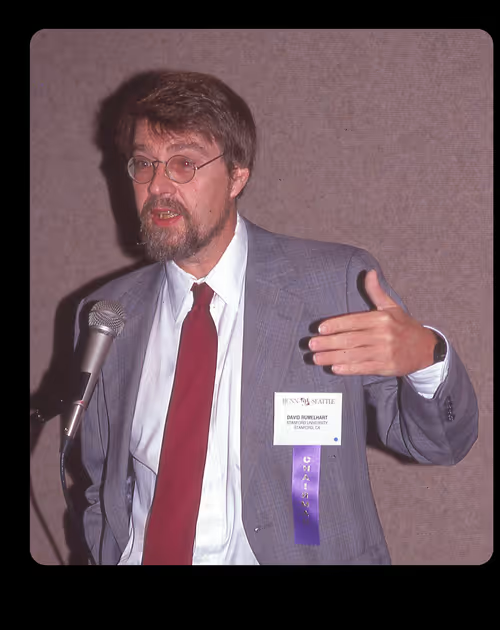

A Brief History of Learning

From the Perceptron to today's frontier models, and the persistent question of control.

From Winter to Renaissance

The 1969 "Perceptrons" critique by Minsky & Papert helped trigger an AI Winter for neural-network research. In 1986, Rumelhart, Hinton, and Williams revived the field by popularizing backpropagation for training multilayer networks.

From Research to Production

What started as university AI research became the foundation for enterprise-grade AI governance.

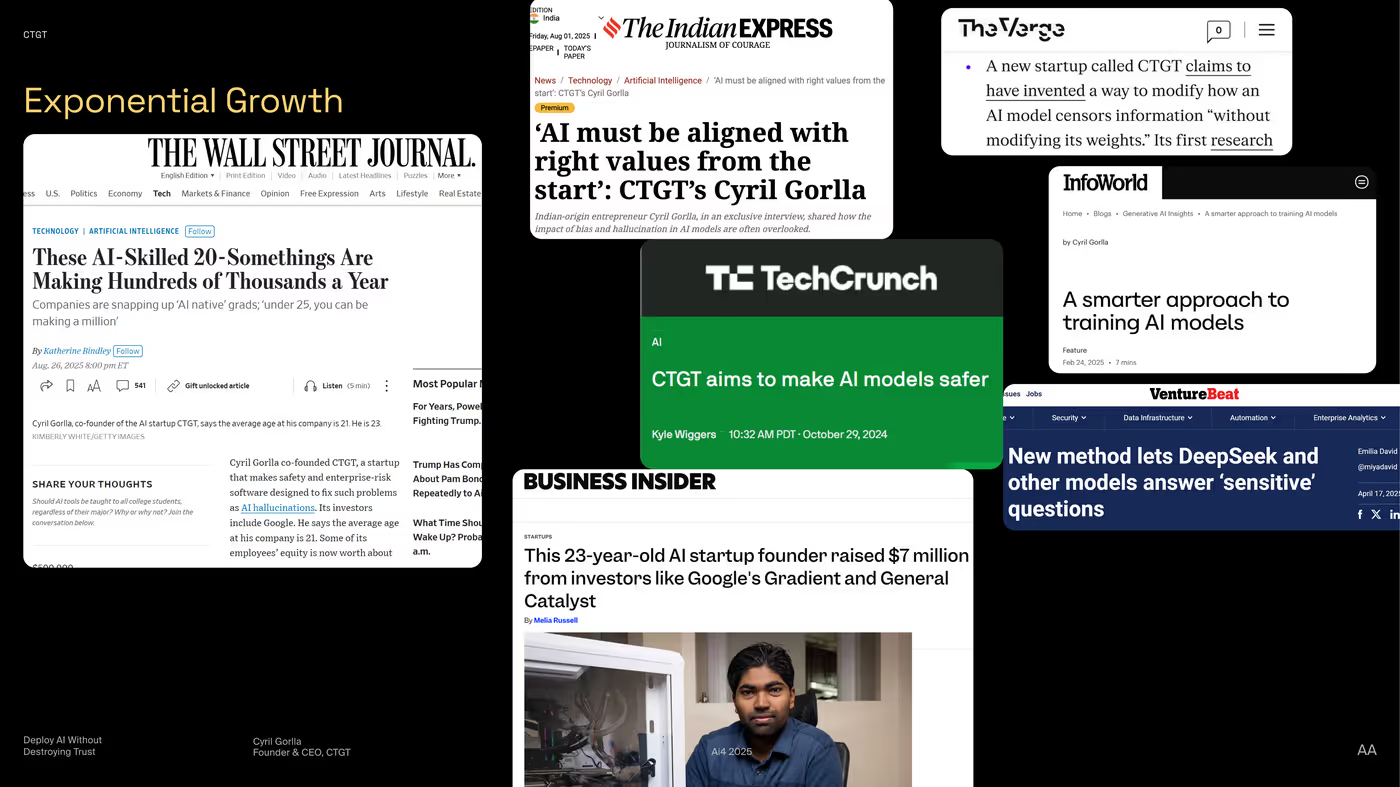

Exponential Growth

From research project to industry recognition. CTGT's approach to AI governance has captured attention across the tech landscape.

Through a Glass, Darkly

The fundamental challenge: AI models are probabilistic black boxes. We can observe inputs and outputs, but the reasoning process remains opaque.


"It is a profound and necessary truth that the deep things in science are not found because they are useful; they are found because it was possible to find them."

— J. Robert Oppenheimer

The Black Box Trust Gap

Enterprises are stuck in "Pilot Purgatory." Fortune 500 companies in finance, media, and healthcare cannot move LLM projects to production because models are inherently probabilistic.

The Limits of Conventional Approaches

Prompt Engineering

Fragile at scale. Models fail to follow 30%+ of rules once the context exceeds 900 policies. Instructions get "forgotten" in long contexts.

Fine-Tuning

Expensive & brittle. $100K+ per iteration. Catastrophic forgetting. Requires retraining when policies change.

RAG Pipelines

Context pollution. RAG often degrades accuracy by introducing noise. On Claude 4.5 Sonnet, accuracy drops from 94% (base) to 85% with RAG.

Binary Guardrails

Kill utility. Current guardrails only block outputs; they don't fix them. You either get an unusable response or a compliance violation. There's no middle ground.

Opening the Black Box

CTGT provides a deterministic enforcement layer, not another probabilistic guardrail. We compile business rules into navigable knowledge graphs that force model compliance.
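The deck does not describe how CTGT's compiler or enforcement layer works internally. As a rough illustration of the two ideas on this slide, compiling rules into a graph and fixing outputs instead of blocking them, here is a minimal Python sketch under loudly stated assumptions: `Rule`, `PolicyGraph`, `compile`, and `remediate` are hypothetical names, the "graph" is only a bipartite index from trigger terms to rules, and remediation is literal phrase substitution. It is not CTGT's method or API.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Rule:
    """Hypothetical business rule: when a trigger term appears, rewrite forbidden phrases."""
    rule_id: str
    triggers: set[str]
    replacements: dict[str, str]  # forbidden phrase -> compliant substitute


@dataclass
class PolicyGraph:
    """Toy stand-in for a compiled policy graph: trigger term -> rules it activates."""
    edges: dict[str, list[Rule]] = field(default_factory=dict)

    @classmethod
    def compile(cls, rules: list[Rule]) -> "PolicyGraph":
        graph = cls()
        for rule in rules:
            for term in rule.triggers:
                graph.edges.setdefault(term.lower(), []).append(rule)
        return graph

    def active_rules(self, text: str) -> list[Rule]:
        # Walk tokens in reading order so rule activation order is reproducible.
        active: list[Rule] = []
        for token in re.findall(r"[a-z0-9']+", text.lower()):
            for rule in self.edges.get(token, []):
                if rule not in active:
                    active.append(rule)
        return active

    def remediate(self, text: str) -> tuple[str, list[tuple[str, str]]]:
        """Rewrite violations instead of blocking; return fixed text plus an audit trail."""
        audit: list[tuple[str, str]] = []
        for rule in self.active_rules(text):
            for bad, good in rule.replacements.items():
                if bad in text:  # exact, case-sensitive match: deliberately simple
                    text = text.replace(bad, good)
                    audit.append((rule.rule_id, bad))
        return text, audit


if __name__ == "__main__":
    # Hypothetical rule id and wording, for illustration only.
    rules = [Rule("REG-BI-1", {"returns"},
                  {"guaranteed returns": "potential returns, which are not guaranteed"})]
    graph = PolicyGraph.compile(rules)
    print(graph.remediate("This fund offers guaranteed returns."))
```

Because rules fire in the order their trigger terms appear, the same input always yields the same rewritten text and the same audit list, which is one simple reading of "deterministic." A real enforcement layer would need far richer matching than exact phrases.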

HaluEval & TruthfulQA Benchmarks

Independent benchmark results across frontier and open-source models. Note how RAG often degrades performance while CTGT consistently improves it.

| Model | Type | Base | + RAG | + CTGT | Δ CTGT vs Base (pp) |
|---|---|---|---|---|---|
| Claude 4.5 Sonnet | Frontier | 93.77% | 84.88% | 94.46% | +0.69 |
| Claude 4.5 Opus | Frontier | 95.08% | 90.87% | 95.30% | +0.22 |
| Gemini 2.5 Flash-Lite | Frontier | 91.96% | 79.18% | 93.77% | +1.81 |
| gpt-oss-120b | OSS | 21.30% | 63.40% | 70.62% | +49.32 |
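The Δ column is a plain difference in percentage points between the + CTGT and Base scores. A quick sketch that recomputes it from the table, alongside the RAG-vs-base change the slide text refers to:

```python
# Accuracy scores (%) copied from the benchmark table: (base, + RAG, + CTGT).
RESULTS = {
    "Claude 4.5 Sonnet": (93.77, 84.88, 94.46),
    "Claude 4.5 Opus": (95.08, 90.87, 95.30),
    "Gemini 2.5 Flash-Lite": (91.96, 79.18, 93.77),
    "gpt-oss-120b": (21.30, 63.40, 70.62),
}


def deltas(base: float, rag: float, ctgt: float) -> tuple[float, float]:
    """Return (RAG - base, CTGT - base) in percentage points, rounded to 2 decimals."""
    return round(rag - base, 2), round(ctgt - base, 2)


if __name__ == "__main__":
    for model, scores in RESULTS.items():
        d_rag, d_ctgt = deltas(*scores)
        print(f"{model:<22} RAG {d_rag:+6.2f} pp   CTGT {d_ctgt:+6.2f} pp")
```

For the open-source model, RAG helps rather than hurts (+42.10 pp), which is why the claim is that RAG "often," not always, degrades accuracy.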

Small Models, Frontier Performance

CTGT's Policy Engine enables smaller, cost-efficient models to match or exceed the base performance of the most expensive frontier systems.

Real-World Examples

Query: "Where did the Olympic wrestler who defeated Elmadi Zhabrailov later go on to coach wrestling at?"

Base model: "The context does not mention where Kevin Jackson went on to coach wrestling."

With CTGT: correctly traces "he" to Kevin Jackson → Iowa State University

Query: "In what year was David Of me born?" (typo for "David Icke")

Base model: "I cannot answer. The text does not contain information about 'David Of me.'"

With CTGT: recognizes the typo and maps it to "David Icke" → 1952

This is the reliability required for financial compliance, legal review, and healthcare applications.

The TCO Revolution

Moving away from fine-tuning, complex prompting, and RAG pipelines not only improves accuracy; it also dramatically reduces Total Cost of Ownership (TCO).

LEGACY APPROACH

- Fine-tuning iterations: $100K+ each

- RAG infrastructure overhead

- 1-2 week policy update cycles

- Engineering maintenance burden

WITH CTGT

- No fine-tuning required

- Minimal infrastructure footprint

- Real-time policy updates

- 10x engineering velocity

ADDITIONAL BENEFITS

- Eliminated risk windows

- Full audit trail

- Model-agnostic deployment

- Weeks to production, not months

Where This Matters

Financial Services

SEC Reg BI compliance, fiduciary duty enforcement, investment advice validation

Legal & Compliance

Contract review, regulatory filing validation, due diligence automation

Healthcare & Life Sciences

Clinical documentation, HIPAA compliance, medical information accuracy

See It In Action

Experience deterministic remediation yourself at playground.ctgt.ai

What You'll See

- Upload any policy document

- Watch it compile into a knowledge graph

- Query the model

- See real-time remediation in action

- Trace every claim to its source
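The deck does not say how the playground links claims back to the policy. One simple way to picture claim-to-source tracing is a lexical matcher: split the answer into sentences, score each sentence against every numbered policy clause by word overlap (Jaccard similarity), and flag anything below a threshold as unsupported. The function names, the 0.3 threshold, and the clause ids are assumptions for illustration, not the playground's behavior; a more robust version would likely use semantic similarity instead of word overlap.

```python
import re
from typing import Optional


def tokens(text: str) -> set[str]:
    """Lowercased word tokens; punctuation is dropped."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Overlap of two token sets, from 0.0 (disjoint) to 1.0 (identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def trace_claims(
    answer: str, sources: dict[str, str], threshold: float = 0.3
) -> list[tuple[str, Optional[str], float]]:
    """Map each sentence of `answer` to its best-matching clause id, or None if unsupported."""
    claims = [s.strip() for s in re.split(r"(?<=[.!?])\s+", answer) if s.strip()]
    trace = []
    for claim in claims:
        claim_tokens = tokens(claim)
        best_id, best_score = None, 0.0
        for clause_id, clause in sources.items():
            score = jaccard(claim_tokens, tokens(clause))
            if score > best_score:
                best_id, best_score = clause_id, score
        # Below the threshold, the claim is treated as having no supporting source.
        supported_by = best_id if best_score >= threshold else None
        trace.append((claim, supported_by, round(best_score, 2)))
    return trace


if __name__ == "__main__":
    # Hypothetical policy clauses and model answer.
    policy = {
        "§2.1": "Advisers must disclose all fees before a recommendation.",
        "§3.4": "Past performance does not guarantee future results.",
    }
    answer = "Advisers must disclose all fees before a recommendation. The fund will double your money."
    for row in trace_claims(answer, policy):
        print(row)
```

Here the first sentence traces to clause §2.1, while the second has no overlapping clause and is flagged as unsupported, the kind of claim a remediation step would then rewrite or remove.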

Try These Scenarios

- Compliance policy enforcement

- Style guide adherence

- Factual claim verification

- Multi-step reasoning validation

- Error tolerance and recovery

The Path Forward

- Scope initial use case

- Benchmark accuracy & TCO

- Prove deployment model

- Roll out to adjacent units

- Add new policy sets

- Prove multi-domain value

- Central governance layer

- Model-agnostic control

- Full audit capability

Key Takeaways

The Black Box Problem is Solvable

Deterministic enforcement layers can open the black box through active verification and remediation, not interpretability research.

RAG Isn't the Answer

Context pollution often degrades model performance. Policy-driven verification improves accuracy without adding noise.

Small Models Can Beat Big Ones

With proper governance, open-source models can exceed frontier model baselines, dramatically reducing TCO at scale.

Production is Possible Now

Pilot purgatory is a choice, not an inevitability. Deterministic governance enables confident enterprise deployment today.

Deploy AI Without Destroying Trust